Overview

When enabled, Elementary automatically streams your workspace’s audit logs (user activity logs and system logs) to your GCS bucket using the Google Cloud Storage API. This allows you to:

- Store logs in your own GCS bucket for long-term retention

- Integrate logs with BigQuery, Dataflow, or other Google Cloud analytics services

- Maintain full control over log storage and access policies

- Process logs using Google Cloud data processing tools

- Archive logs for compliance and audit requirements

Prerequisites

Before configuring log streaming to GCS, you’ll need:

- GCS Bucket - A Google Cloud Storage bucket where logs will be stored

  - The bucket must exist and be accessible

  - You’ll need the bucket path (e.g., `gs://my-logs-bucket`)

- Google Cloud Service Account - A service account with permissions to write to the bucket

  - Required role: Storage Object User (`roles/storage.objectUser`)

  - You’ll need to generate a service account JSON key file

  - The service account key file must be uploaded in Elementary

  - Workload Identity Federation: Support for Workload Identity Federation with BigQuery service accounts is coming soon
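A quick local check of the bucket path can catch typos before you enter it in Elementary. The sketch below is a simplified validation and does not cover all of Google's bucket naming rules; the function name `bucket_name_from_path` is hypothetical and not part of any Elementary or Google API.

```python
import re

# Simplified pattern (an assumption, not the complete GCS naming rules):
# "gs://" followed by 3-63 characters of lowercase letters, digits, dots,
# underscores, or dashes, starting and ending with a letter or digit.
_BUCKET_PATH = re.compile(r"gs://([a-z0-9][a-z0-9._-]{1,61}[a-z0-9])/?")


def bucket_name_from_path(bucket_path: str) -> str:
    """Return the bucket name from a gs:// bucket path, or raise ValueError."""
    match = _BUCKET_PATH.fullmatch(bucket_path.strip())
    if match is None:
        raise ValueError(f"not a valid GCS bucket path: {bucket_path!r}")
    return match.group(1)


print(bucket_name_from_path("gs://my-logs-bucket"))  # my-logs-bucket
```

This only checks the shape of the path. It does not confirm that the bucket exists or that the service account can write to it.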

Configuring Log Streaming to GCS

- Navigate to the Logs page:

  - Click on your account name in the top-right corner of the UI

  - Open the dropdown menu

  - Select Logs

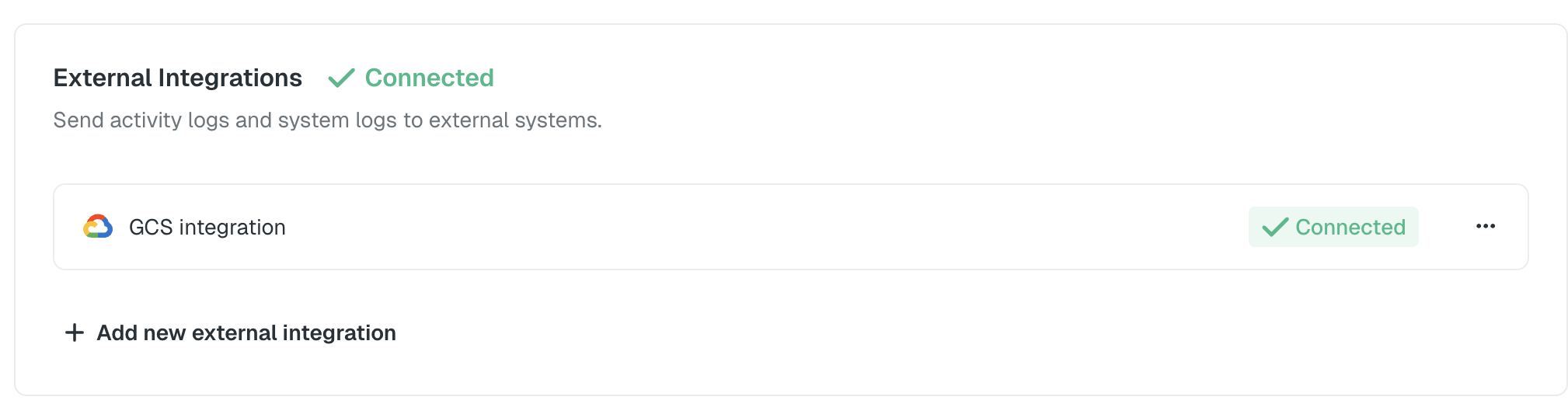

- In the External Integrations section, click the Connect button

- In the modal that opens, select Google Cloud Storage (GCS) as your log streaming destination

- Enter your GCS configuration:

  - Bucket Path: The full GCS bucket path (e.g., `gs://my-logs-bucket`)

  - Service Account Key File: Upload your Google Cloud service account JSON key file

    - To generate a service account key file:

      - Go to Google Cloud Console > IAM & Admin > Service Accounts

      - Select your service account (or create a new one)

      - Click the three dots menu and select “Manage keys”

      - Click “ADD KEY” and select “Create new key”

      - Choose “JSON” format and click “CREATE”

      - The JSON file will be downloaded automatically

- Click Save to enable log streaming

The log streaming configuration applies to your entire workspace. Both user activity logs and system logs will be streamed to your GCS bucket in batches.
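Before uploading the downloaded key file, it can help to confirm that it is a service account key and not some other JSON file. The following is a minimal sketch using only the standard library; the helper name `check_service_account_key` and the sample values are hypothetical, and the check covers only a few of the fields such a key file normally contains.

```python
import json

# A subset of the fields found in a Google Cloud service account JSON key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(key_json: str) -> list[str]:
    """Return a list of problems found in the key file contents (empty if none)."""
    try:
        key = json.loads(key_json)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(key, dict):
        return ["top-level JSON value is not an object"]
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)]
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"unexpected type: {key['type']!r}")
    return problems


# Hypothetical key contents with the secret replaced by a placeholder.
sample = json.dumps({
    "type": "service_account",
    "project_id": "my-project",
    "private_key": "<redacted>",
    "client_email": "log-writer@my-project.iam.gserviceaccount.com",
})
print(check_service_account_key(sample))  # []
```

In practice you would pass the contents of the downloaded file, for example `check_service_account_key(open(path).read())`. The check is structural only and says nothing about whether the account holds the required role.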

Log Batching

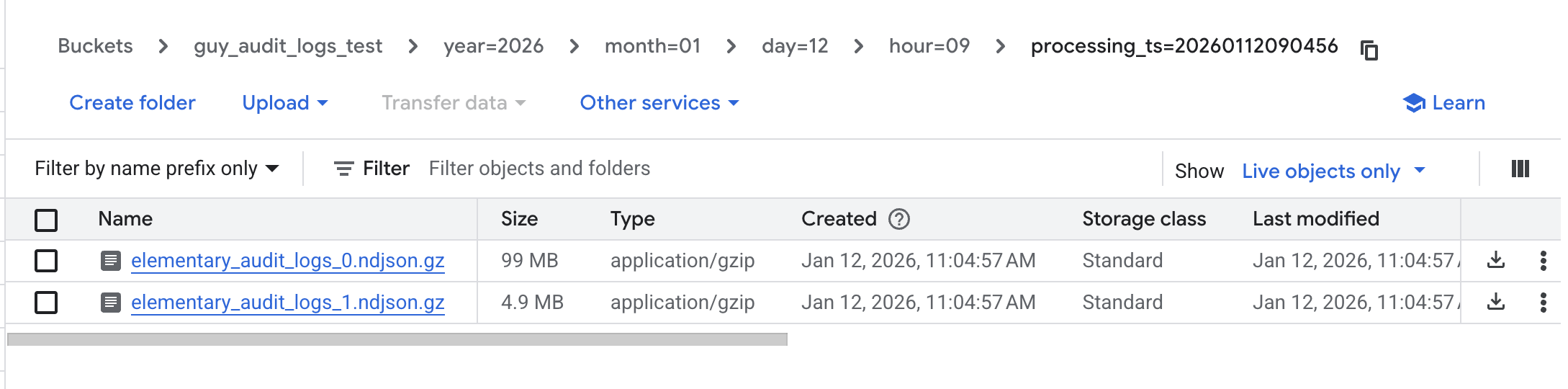

Logs are automatically batched and written to GCS files based on the following criteria:

- Time-based batching: A new file is created every 15 minutes

- Size-based batching: A new file is created when the batch reaches 100MB
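To make the two criteria concrete, here is an illustrative sketch of a batcher that closes a batch when either limit is reached. It is not Elementary's implementation. The class name `LogBatcher`, the reading of 100MB as 100 MiB, and the choice to never flush an empty batch are all assumptions.

```python
import time
from dataclasses import dataclass, field
from typing import Optional

MAX_BATCH_AGE_SECONDS = 15 * 60       # time-based limit from the text
MAX_BATCH_BYTES = 100 * 1024 * 1024   # assumption: "100MB" read as 100 MiB


@dataclass
class LogBatcher:
    """Collects NDJSON lines and reports when the batch should be closed."""

    opened_at: float = field(default_factory=time.monotonic)
    size_bytes: int = 0
    lines: list = field(default_factory=list)

    def add(self, line: str) -> None:
        self.lines.append(line)
        self.size_bytes += len(line.encode("utf-8")) + 1  # +1 for the newline

    def should_flush(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        too_old = now - self.opened_at >= MAX_BATCH_AGE_SECONDS
        too_big = self.size_bytes >= MAX_BATCH_BYTES
        # Assumption: an empty batch is never written, even after 15 minutes.
        return bool(self.lines) and (too_old or too_big)


batch = LogBatcher(opened_at=0.0)
batch.add('{"action": "user_login"}')
print(batch.should_flush(now=60.0))   # False
print(batch.should_flush(now=900.0))  # True
```

For consumers, the practical consequence is that a log entry can take up to roughly 15 minutes to appear in the bucket, and that files from busy periods will be cut by size before the time limit.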

File Path Format

Logs are stored at the root of your bucket using a Hive-based partitioning structure for efficient querying and organization. File paths are built from the following components:

- `{log_type}`: Either `audit` (for user activity logs) or `system` (for system logs)

- `{YYYY-MM-DD}`: Date in ISO format (e.g., `2024-01-15`)

- `{HH}`: Hour in 24-hour format (e.g., `14`)

- `{timestamp}`: Unix timestamp when the file was created

- `{batch_id}`: Unique identifier for the batch

Example File Paths

This partitioning structure lets you:

- Efficiently query logs by date and hour using BigQuery or other tools

- Filter logs by type (`audit` or `system`)

- Process logs in parallel by partition
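As an illustration of filtering by partition, the sketch below extracts `key=value` directory segments from Hive-style object names. The partition key names (`log_type`, `date`, `hour`) and the file names in the sample list are hypothetical and used only for illustration. Check the actual object names in your bucket before relying on them.

```python
from pathlib import PurePosixPath


def parse_partitions(object_name: str) -> dict[str, str]:
    """Extract key=value directory segments from a Hive-style object name."""
    partitions = {}
    for segment in PurePosixPath(object_name).parent.parts:
        key, sep, value = segment.partition("=")
        if sep and key:
            partitions[key] = value
    return partitions


# Hypothetical object names: key names and file names are illustrative only.
objects = [
    "log_type=audit/date=2024-01-15/hour=14/example-1.ndjson",
    "log_type=system/date=2024-01-15/hour=14/example-2.ndjson",
    "log_type=audit/date=2024-01-16/hour=09/example-3.ndjson",
]

audit_on_15th = [
    name
    for name in objects
    if parse_partitions(name).get("log_type") == "audit"
    and parse_partitions(name).get("date") == "2024-01-15"
]
print(audit_on_15th)
# ['log_type=audit/date=2024-01-15/hour=14/example-1.ndjson']
```

Because the partition values are encoded in the path, this kind of filter needs only a listing of object names and never has to open the files.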

Log Format

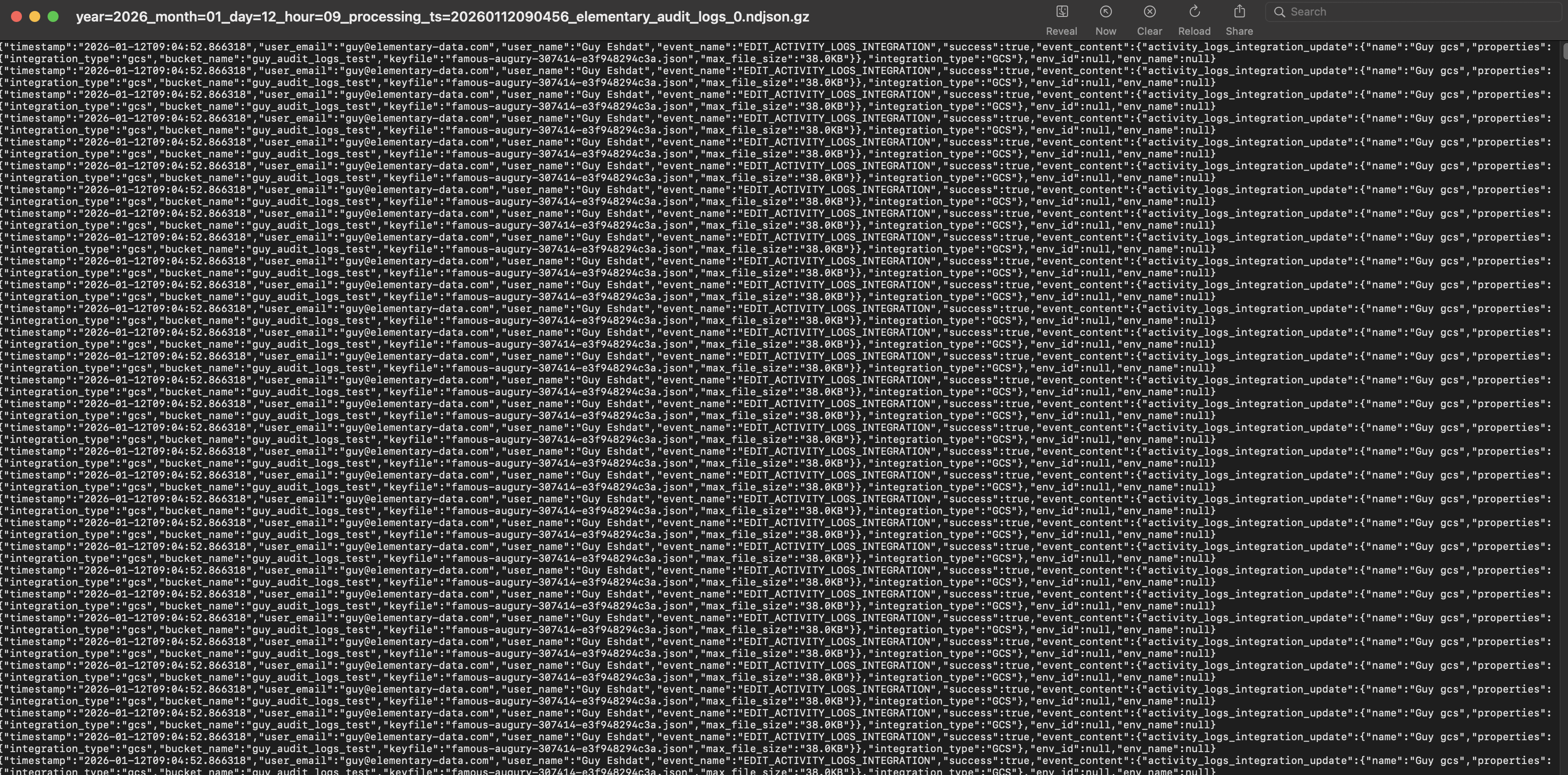

Logs are stored as line-delimited JSON (NDJSON), where each line represents a single log entry as a JSON object.

User Activity Logs

Each user activity log entry includes:

System Logs

Each system log entry includes:

Field Descriptions

- `timestamp`: ISO 8601 timestamp of the event (UTC)

- `log_type`: Either `"audit"` for user activity logs or `"system"` for system logs

- `action`: The specific action that was performed (e.g., `user_login`, `create_test`, `dbt_data_sync_completed`)

- `success`: Boolean indicating whether the action completed successfully

- `user`: User information (only present in audit logs)

  - `id`: User ID

  - `email`: User email address

  - `name`: User display name

- `env_id`: Environment identifier (empty string for account-level actions)

- `env_name`: Environment name (empty string for account-level actions)

- `data`: Additional context-specific information as a JSON object
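Because each line is a standalone JSON object, a downloaded log file can be processed line by line. The sketch below is a minimal example using only the standard library. The two sample entries are hypothetical, assembled from the field descriptions above, so real entries may contain different values or additional keys.

```python
import json


def read_ndjson(text: str):
    """Yield one dict per non-empty line of NDJSON text."""
    for line in text.splitlines():
        if line.strip():
            yield json.loads(line)


# Hypothetical entries built from the documented fields; real entries may differ.
sample = "\n".join([
    json.dumps({
        "timestamp": "2024-01-15T14:03:21Z", "log_type": "audit",
        "action": "user_login", "success": True,
        "user": {"id": "u-1", "email": "ada@example.com", "name": "Ada"},
        "env_id": "", "env_name": "", "data": {},
    }),
    json.dumps({
        "timestamp": "2024-01-15T14:05:02Z", "log_type": "system",
        "action": "dbt_data_sync_completed", "success": False,
        "env_id": "env-1", "env_name": "prod", "data": {},
    }),
])

failed = [e["action"] for e in read_ndjson(sample) if not e["success"]]
audit_users = [e["user"]["email"] for e in read_ndjson(sample) if e["log_type"] == "audit"]
print(failed)       # ['dbt_data_sync_completed']
print(audit_users)  # ['ada@example.com']
```

Note that `user` is read only for entries whose `log_type` is `"audit"`, since system log entries do not carry it.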

Disabling Log Streaming

To disable log streaming to GCS:

- Navigate to the Logs page

- In the External Integrations section, find your GCS integration

- Click Disable or remove the GCS configuration

- Confirm the action